A neuromorphic computing architecture that can run some deep neural networks more efficiently


As artificial intelligence and deep learning techniques become increasingly advanced, engineers will need to create hardware that can run their computations both reliably and efficiently. Neuromorphic computing hardware, which is inspired by the structure and biology of the human brain, could be particularly promising for supporting the operation of sophisticated deep neural networks (DNNs).

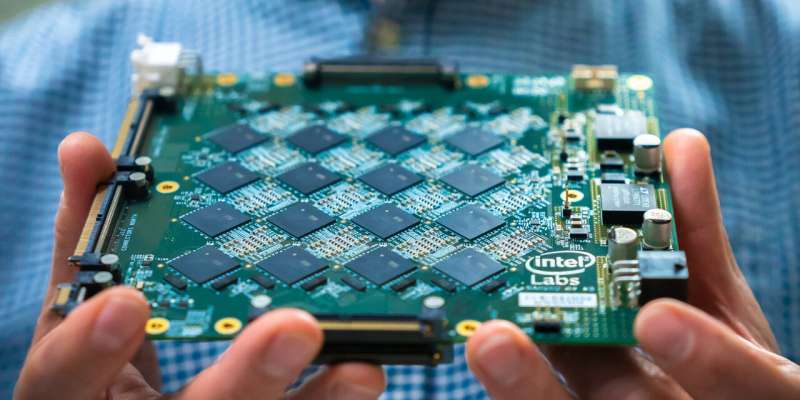

Researchers at Graz University of Technology and Intel have recently demonstrated the considerable potential of neuromorphic computing hardware for running DNNs in an experimental setting. Their paper, published in Nature Machine Intelligence and funded by the Human Brain Project (HBP), shows that neuromorphic computing hardware could run large DNNs 4 to 16 times more energy-efficiently than conventional (i.e., non-brain-inspired) computing hardware.

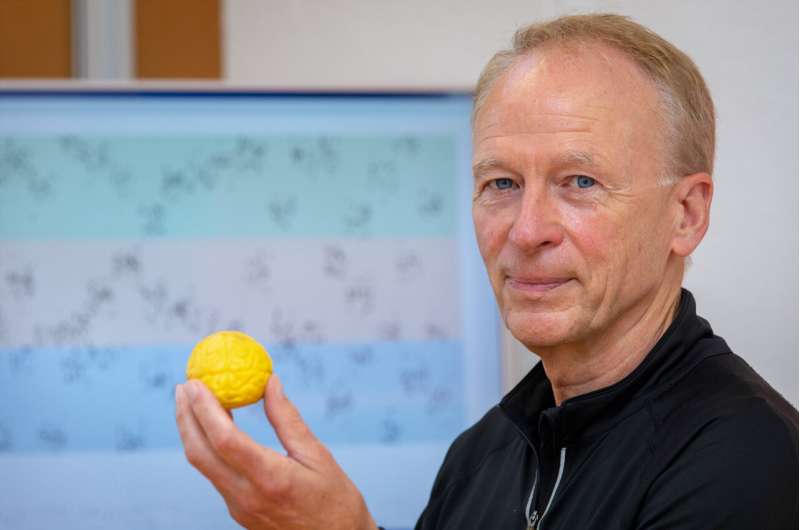

“We have shown that a large class of DNNs, those that process temporally extended inputs such as sentences, can be implemented substantially more energy-efficiently if one solves the same problems on neuromorphic hardware with brain-inspired neurons and neural network architectures,” Wolfgang Maass, one of the researchers who carried out the study, told TechXplore. “Also, the DNNs that we considered are critical for higher-level cognitive functions, such as finding relations between sentences in a story and answering questions about its content.”

In their tests, Maass and his colleagues evaluated the energy efficiency of a large neural network running on a neuromorphic computing chip created by Intel. This DNN was specifically designed to process long sequences of letters or digits, such as sentences.

The researchers measured the energy consumption of the Intel neuromorphic chip and of a standard computer chip while running this same DNN, and then compared their performance. Interestingly, they found that adapting the neuron models implemented in the hardware so that they resembled neurons in the brain gave the DNN new functional properties, improving its energy efficiency.

“Enhanced energy efficiency of neuromorphic hardware has often been conjectured, but it was difficult to demonstrate for demanding AI tasks,” Maass said. “The reason is that if one replaces the artificial neuron models used by DNNs in AI, which are activated tens of thousands of times per second or more, with more brain-like ‘lazy’ and therefore more energy-efficient spiking neurons that resemble those in the brain, one usually had to make the spiking neurons hyperactive, much more so than neurons in the brain (where an average neuron emits a signal only a few times per second). These hyperactive neurons, however, consumed too much energy.”

Many neurons in the brain require an extended resting period after being active for a while. Previous studies aimed at replicating biological neural dynamics in hardware often produced disappointing results because of the hyperactivity of the artificial neurons, which consumed too much energy when running particularly large and complex DNNs.
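The energy argument above can be made concrete with a rough operation count. This is a simplified sketch, not the study's method (the researchers measured actual energy consumption on real chips): it assumes energy scales with the number of synaptic operations, so a dense artificial layer pays for every connection on every time step, while an event-driven spiking layer only does work when a neuron spikes. The function names, layer sizes and firing rates are hypothetical.

```python
def dense_synaptic_ops(n_in: int, n_out: int, steps: int) -> int:
    """Synaptic updates in a dense layer: every input reaches every output on every step."""
    return n_in * n_out * steps


def spiking_synaptic_ops(n_in: int, n_out: int, steps: int,
                         rate_hz: float, dt_s: float) -> int:
    """Expected synaptic events in an event-driven spiking layer.

    Assumption: each input neuron fires at `rate_hz` and each spike is
    delivered to all `n_out` targets; silent neurons cost nothing.
    """
    expected_spikes = n_in * rate_hz * dt_s * steps
    return round(expected_spikes * n_out)


if __name__ == "__main__":
    n_in, n_out, steps, dt_s = 100, 100, 1000, 0.001  # 1 s simulated in 1 ms steps
    dense = dense_synaptic_ops(n_in, n_out, steps)
    for rate_hz in (5.0, 50.0, 500.0):  # brain-like, busy, hyperactive
        sparse = spiking_synaptic_ops(n_in, n_out, steps, rate_hz, dt_s)
        print(f"{rate_hz:5.0f} Hz: {sparse:>10,d} spike-driven ops vs {dense:,d} dense ops")
```

With these made-up numbers, the spike-driven count is 200 times smaller than the dense count at 5 Hz but only 2 times smaller at 500 Hz, which is the sense in which hyperactive spiking neurons give the savings back.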

In their experiments, Maass and his colleagues showed that the tendency of many biological neurons to rest after spiking could be replicated in neuromorphic hardware and used as a “computational trick” to solve time series processing tasks more efficiently. In these tasks, new information needs to be combined with information gathered in the recent past (e.g., sentences from a story that the network processed earlier).
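The “resting” behaviour described above is commonly modelled as spike-frequency adaptation. The sketch below uses an adaptive leaky integrate-and-fire neuron, a standard textbook model chosen here as an assumption, since the article does not spell out the chip's exact neuron model: every spike raises the firing threshold, and that extra threshold decays much more slowly than the membrane potential. The function name and all parameter values are illustrative.

```python
import math


def simulate_alif(inputs, tau_m=20.0, tau_a=200.0, v_th=1.0, beta=0.5):
    """Simulate one adaptive leaky integrate-and-fire neuron in discrete time steps.

    Returns the spike train (0/1 per step) and the adaptation trace.
    All parameters are hypothetical and expressed in units of time steps.
    """
    alpha = math.exp(-1.0 / tau_m)  # fast leak of the membrane potential
    rho = math.exp(-1.0 / tau_a)    # slow recovery from adaptation
    v, a = 0.0, 0.0
    spikes, adaptation = [], []
    for current in inputs:
        v = alpha * v + current
        fired = v >= v_th + beta * a  # threshold rises with adaptation
        if fired:
            v = 0.0  # reset the membrane after a spike
        a = rho * a + (1.0 if fired else 0.0)  # each spike adds "tiredness"
        spikes.append(int(fired))
        adaptation.append(a)
    return spikes, adaptation


if __name__ == "__main__":
    drive = [0.3] * 400 + [0.0] * 200  # constant input, then silence
    spikes, adaptation = simulate_alif(drive)
    print("spikes in steps 0-99:   ", sum(spikes[:100]))
    print("spikes in steps 300-399:", sum(spikes[300:400]))
    print("adaptation at steps 399 and 599:", round(adaptation[399], 2), round(adaptation[599], 2))
```

Under constant input, the neuron fires noticeably less often in steps 300 to 399 than in its first 100 steps, and once the input stops its adaptation level is still above zero: a slowly fading trace of recent activity.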

“We showed that the network just needs to check which neurons are currently most tired, i.e., reluctant to fire, since these are the ones that were active in the recent past,” Maass said. “Using this strategy, a clever network can reconstruct what information was recently processed. Thus, ‘laziness’ can have advantages in computing.”
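The “which neurons are most tired” idea can be sketched with a small population of adaptive leaky integrate-and-fire neurons (an assumed model with hypothetical parameters, not the chip's documented implementation). Each item in a sequence drives its own neuron for a fixed time; afterwards, the neurons that fired most recently carry the most leftover adaptation, so sorting by adaptation recovers the order in which items were seen, most recent first. As a simplification, the code reads the adaptation variable directly, whereas a network would have to infer it from how reluctant each neuron is to fire. The class and function names are illustrative.

```python
import math

# Hypothetical parameters, in units of discrete time steps.
TAU_M, TAU_A, V_TH, BETA = 20.0, 200.0, 1.0, 0.5


class AdaptiveNeuron:
    """Adaptive leaky integrate-and-fire neuron; `a` measures how 'tired' it is."""

    def __init__(self) -> None:
        self.v = 0.0  # membrane potential
        self.a = 0.0  # adaptation level: raised by spikes, decays slowly

    def step(self, current: float) -> bool:
        self.v = math.exp(-1.0 / TAU_M) * self.v + current
        fired = self.v >= V_TH + BETA * self.a  # tired neurons need more input
        if fired:
            self.v = 0.0
        self.a = math.exp(-1.0 / TAU_A) * self.a + (1.0 if fired else 0.0)
        return fired


def present_sequence(items, n_neurons=4, steps_per_item=50, gap=10, drive=0.3):
    """Drive neuron k while item k is shown, one item after another, then pause."""
    neurons = [AdaptiveNeuron() for _ in range(n_neurons)]
    for item in items:
        for _ in range(steps_per_item):
            for k, neuron in enumerate(neurons):
                neuron.step(drive if k == item else 0.0)
    for _ in range(gap):  # input is gone; only the adaptation "memory" remains
        for neuron in neurons:
            neuron.step(0.0)
    return neurons


def recency_ranking(neurons):
    """Neuron indices ordered from most to least tired, i.e. most recent item first."""
    return sorted(range(len(neurons)), key=lambda k: neurons[k].a, reverse=True)


if __name__ == "__main__":
    neurons = present_sequence([2, 0, 3])
    print("most recent first:", recency_ranking(neurons))
```

Running it prints `most recent first: [3, 0, 2, 1]`: neuron 3 saw the last item and neuron 1 was never driven. Because every item gets the same drive, leftover tiredness depends only on how long ago the item was shown; with unequal inputs, this simple readout would be less reliable.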

The researchers demonstrated that, when running the same DNN, Intel's neuromorphic computing chip consumed 4 to 16 times less energy than a standard chip. In addition, they outlined how the artificial neurons' reduced activity after spiking could be leveraged to significantly improve the hardware's performance on time series processing tasks.

In the future, the Intel chip and the approach proposed by Maass and his colleagues could help improve the efficiency of neuromorphic computing hardware in running large and complex DNNs. In their next work, the team would also like to devise more bio-inspired strategies to enhance the performance of neuromorphic chips, as current hardware captures only a tiny fraction of the complex dynamics and functions of the human brain.

“For example, human brains can learn from seeing a scene or hearing a sentence just once, whereas DNNs in AI require excessive training on zillions of examples,” Maass added. “One trick that the brain uses for fast learning is to use different learning methods in different parts of the brain, while DNNs typically use just one. In my next studies, I would like to enable neuromorphic hardware to develop a ‘personal’ memory based on its past ‘experiences,’ just like a human would, and use this personal experience to make better decisions.”

Demonstrating significant energy savings using neuromorphic hardware

Arjun Rao et al, A Long Short-Term Memory for AI Applications in Spike-based Neuromorphic Hardware, Nature Machine Intelligence (2022). DOI: 10.1038/s42256-022-00480-w

© 2022 Science X Network

Citation:

A neuromorphic computing architecture that can run some deep neural networks more efficiently (2022, June 14)

retrieved 25 June 2022

from https://techxplore.com/news/2022-06-neuromorphic-architecture-deep-neural-networks.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.
